Introduction

In the digital age, the role of social media in spreading misinformation has become a hot topic that appears frequently in the IELTS Reading test. The topic “Social media’s role in misinformation campaigns” not only reflects a modern social trend but also requires candidates to analyze, compare, and evaluate complex information.

This article provides a complete IELTS Reading practice test with 3 passages in the standard international format, with difficulty increasing from Easy to Hard. You will practice the common question types, such as Multiple Choice, True/False/Not Given, Matching Headings, and others. Every question comes with a detailed answer and an explanation of where the information is located and how it is paraphrased, so you can see how to approach the test.

The article also compiles key vocabulary for each passage in detailed lookup tables to help you build your academic vocabulary. This test suits learners from band 5.0 upward and is especially useful for those aiming for band 7.0+.

1. IELTS Reading Test Guide

Overview of the IELTS Reading Test

The IELTS Reading test lasts 60 minutes and has 3 passages with 40 questions in total. Each correct answer earns 1 mark, and there is no penalty for wrong answers. Difficulty increases from Passage 1 to Passage 3, so you need to allocate your time sensibly.

Recommended time allocation:

- Passage 1: 15-17 minutes (difficulty Easy, band 5.0-6.5)

- Passage 2: 18-20 minutes (difficulty Medium, band 6.0-7.5)

- Passage 3: 23-25 minutes (difficulty Hard, band 7.0-9.0)

Important note: You must transfer your answers to the Answer Sheet within the 60 minutes; no extra time is given. Practice time management from the very start.

Question Types in This Test

This sample test includes the 7 most common IELTS Reading question types:

- Multiple Choice – choose the correct option from several choices

- True/False/Not Given – decide whether a statement is true, false, or not mentioned

- Yes/No/Not Given – identify the writer’s views

- Matching Headings – match headings to paragraphs

- Summary Completion – complete a summary

- Matching Features – match information to features

- Short-answer Questions – give short answers

Each question type calls for its own skills and strategy, which are explained in detail in the answer section.

2. IELTS Reading Practice Test

PASSAGE 1 – The Digital Spread of False Information

Difficulty: Easy (Band 5.0-6.5)

Suggested time: 15-17 minutes

Social media platforms have fundamentally transformed the way information circulates in modern society. What once required traditional media channels such as newspapers, television, or radio can now be shared with millions of people within seconds through a simple click. While this democratization of information has brought numerous benefits, allowing ordinary citizens to participate in public discourse and share their experiences, it has also created unprecedented challenges regarding the authenticity and reliability of content.

Misinformation, defined as false or inaccurate information regardless of intent to deceive, spreads remarkably quickly on social media platforms. A study conducted by the Massachusetts Institute of Technology in 2018 found that false news stories are 70% more likely to be retweeted than true stories, and they reach 1,500 people six times faster than accurate information. This alarming trend can be attributed to several factors inherent in social media design and human psychology.

Firstly, social media algorithms are programmed to prioritize engagement rather than accuracy. Content that generates strong emotional responses—whether anger, fear, or excitement—is more likely to appear in users’ feeds. Sensational headlines and provocative claims naturally attract more attention than nuanced, fact-based reporting. This creates a perverse incentive structure where misleading content receives greater visibility and reach than truthful information.

Secondly, the echo chamber effect amplifies misinformation within like-minded communities. Social media platforms use sophisticated algorithms to show users content similar to what they have previously engaged with. Over time, individuals become increasingly isolated from diverse perspectives and are primarily exposed to information that confirms their existing beliefs. This confirmation bias makes people more susceptible to accepting false information that aligns with their worldview while dismissing contradictory evidence.

The human element plays a crucial role in misinformation spread as well. People tend to share content based on headlines without reading full articles—a phenomenon known as “headline sharing.” Research indicates that approximately 59% of links shared on social media have never been clicked by the person sharing them. This superficial engagement with content allows misleading headlines to circulate widely even when the article itself might contain more balanced information.

Moreover, the anonymity and low barrier to entry on social media platforms enable malicious actors to create fake accounts and coordinate campaigns to spread false narratives. These coordinated inauthentic behavior networks can artificially inflate the perceived popularity of certain messages, making them appear more credible and widely accepted than they actually are. During the 2016 U.S. presidential election, for instance, researchers identified thousands of automated accounts, or “bots,” that amplified divisive content and conspiracy theories.

The consequences of social media misinformation extend far beyond the digital realm. False health information has led people to reject life-saving vaccines or pursue dangerous alternative treatments. Political misinformation can undermine democratic processes and fuel social divisions. During the COVID-19 pandemic, the World Health Organization identified an “infodemic”—an overabundance of information, both accurate and false—that made it difficult for people to find trustworthy guidance when they needed it most.

Addressing this challenge requires multi-faceted approaches. Social media companies have begun implementing fact-checking partnerships, adding warning labels to disputed content, and adjusting algorithms to reduce the spread of misinformation. However, critics argue these efforts are insufficient and often inconsistently applied. Some advocate for greater transparency in algorithmic functioning, while others call for stronger regulations requiring platforms to take responsibility for content hosted on their services.

Educational initiatives focused on digital literacy represent another crucial component of the solution. Teaching people to critically evaluate sources, recognize manipulation techniques, and verify information before sharing can help create a more discerning audience. Several countries have introduced media literacy programs in schools, while non-profit organizations offer online courses and resources for adults.

Ultimately, combating social media misinformation requires cooperation among technology companies, governments, educators, and individual users. As these platforms continue to play an increasingly central role in how societies communicate and access information, developing effective strategies to ensure information integrity has become one of the most pressing challenges of our digital age.

Questions 1-6

Do the following statements agree with the information given in Reading Passage 1?

Write:

- TRUE if the statement agrees with the information

- FALSE if the statement contradicts the information

- NOT GIVEN if there is no information on this

1. Traditional media channels are faster at spreading information than social media platforms.

2. The MIT study found that false information reaches audiences more rapidly than true information.

3. Social media algorithms are specifically designed to prevent the spread of false information.

4. Approximately 59% of people share articles on social media without reading them completely.

5. The World Health Organization created the term “infodemic” before the COVID-19 pandemic.

6. All countries have now introduced digital literacy programs in their school curricula.

Questions 7-10

Complete the sentences below.

Choose NO MORE THAN TWO WORDS from the passage for each answer.

7. Social media platforms use __ to determine which content appears in users’ feeds.

8. When people mainly see information that confirms their existing beliefs, they experience __.

9. Fake accounts on social media can create __ to spread false narratives systematically.

10. Critics argue that social media companies should provide more __ about how their algorithms work.

Questions 11-13

Choose the correct letter, A, B, C, or D.

11. According to the passage, what makes false news spread faster than accurate information?

- A. It is written by professional journalists

- B. It creates strong emotional reactions

- C. It contains more factual details

- D. It is promoted by government agencies

12. What is the main purpose of echo chambers on social media?

- A. To expose users to diverse viewpoints

- B. To show users content similar to their previous engagement

- C. To verify the accuracy of information

- D. To reduce the time spent on platforms

13. According to the passage, what is one consequence of health misinformation?

- A. Increased funding for medical research

- B. Better communication between doctors and patients

- C. People rejecting vaccines that could save their lives

- D. More people studying medicine at universities

PASSAGE 2 – Psychological Mechanisms Behind Viral Misinformation

Difficulty: Medium (Band 6.0-7.5)

Suggested time: 18-20 minutes

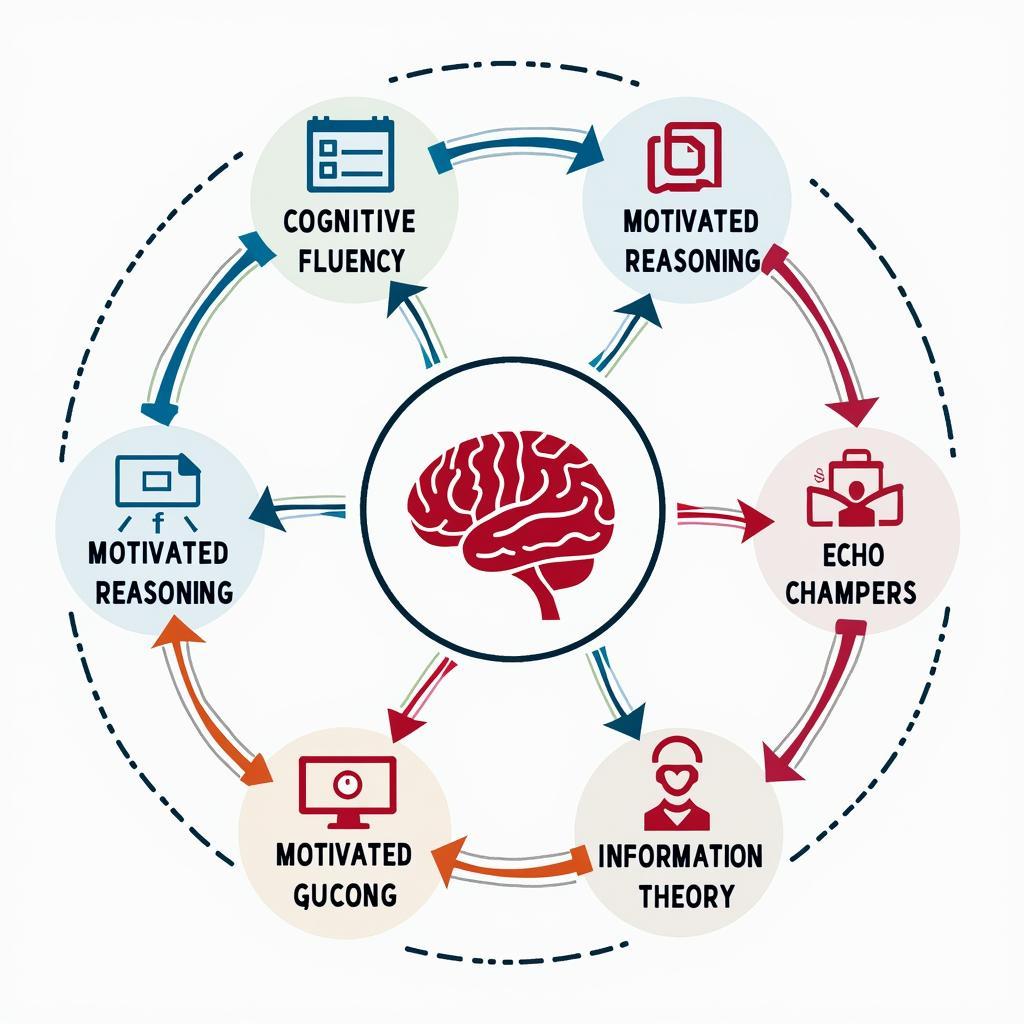

The phenomenon of misinformation spreading through social media networks represents a complex interplay between technological infrastructure and human cognitive biases. While technological solutions such as algorithmic content moderation and automated fact-checking have received considerable attention, understanding the psychological mechanisms that make individuals susceptible to false information is equally crucial for developing effective countermeasures.

Cognitive fluency—the ease with which information can be processed—plays a pivotal role in determining whether people accept or reject claims. Information presented in simple, clear language with familiar concepts tends to be perceived as more truthful than complex, nuanced explanations, regardless of actual accuracy. This fluency heuristic explains why oversimplified narratives and catchy slogans often prove more persuasive than detailed, evidence-based arguments that require sustained attention and critical thinking.

The phenomenon becomes more problematic when combined with the illusory truth effect, whereby repeated exposure to a statement increases its perceived credibility. Social media’s algorithmic amplification means users frequently encounter the same false claims multiple times through different sources within their network, creating an impression of widespread consensus. Experimental studies have demonstrated that people can come to believe false information even when they initially knew it to be incorrect, simply through repeated exposure over time.

Motivated reasoning represents another significant psychological barrier to accurate information processing. When confronted with evidence that contradicts their pre-existing beliefs or group identity, individuals often engage in biased evaluation, scrutinizing unwelcome information more critically while accepting supportive evidence with minimal skepticism. This asymmetrical processing creates a self-reinforcing cycle where exposure to contradictory evidence may paradoxically strengthen rather than weaken false beliefs—a phenomenon researchers term the “backfire effect.”

Social identity theory provides additional insight into why misinformation campaigns prove so effective. People derive significant portions of their self-concept from group memberships, whether political, religious, or cultural. When misinformation aligns with group values or demonizes perceived out-groups, accepting and sharing such content becomes an act of social affiliation and identity expression. The social rewards of in-group approval can outweigh concerns about factual accuracy, particularly in highly polarized environments.

The availability heuristic further complicates matters. This cognitive shortcut leads people to judge the likelihood or importance of events based on how easily examples come to mind. Dramatic, emotionally charged misinformation tends to be more memorable and mentally accessible than accurate but mundane information. Consequently, individuals may overestimate the prevalence of events highlighted by false viral content while underestimating more common but less sensational realities.

Source confusion and misattribution of memory also contribute to misinformation’s effectiveness. After encountering information through social media, people often remember the content while forgetting the source or the context in which they encountered it. A claim initially seen with a fact-checking warning label might later be recalled without that crucial qualifier, effectively transforming corrected misinformation into accepted fact in the individual’s memory.

The phenomenon of “information laundering” exploits these psychological vulnerabilities systematically. Coordinated campaigns introduce false narratives through low-credibility sources, then use network amplification to move this content through progressively more mainstream channels. By the time average users encounter the information, it appears to come from multiple independent sources, creating an illusion of corroboration that bypasses normal skepticism.

Research into emotional contagion reveals how misinformation leveraging strong emotions spreads more effectively than neutral content. Anger and anxiety prove particularly potent in driving engagement and sharing behavior. Misinformation campaigns frequently employ outrage generation as a deliberate strategy, crafting narratives designed to provoke intense emotional responses that override rational evaluation.

Understanding these psychological mechanisms has important implications for intervention design. Traditional fact-checking, while valuable, may prove insufficient when addressing deeply entrenched beliefs supported by motivated reasoning and social identity concerns. More promising approaches include “inoculation” strategies that expose people to weakened forms of misinformation techniques, building cognitive resistance similar to how vaccines build immunity. Pre-bunking rather than debunking—warning people about manipulation tactics before they encounter specific false claims—shows particular promise in experimental settings.

Additionally, interventions that address the social and emotional functions misinformation serves may prove more effective than those focusing solely on factual corrections. Approaches that acknowledge legitimate concerns, provide alternative narratives that satisfy psychological needs, and create opportunities for constructive group identity expression may help reduce receptivity to false content without triggering defensive reactions.

The challenge remains formidable. Social media platforms have created an information environment that systematically exploits cognitive vulnerabilities that evolved in very different contexts. Developing effective responses requires not only technological solutions but also deeper understanding of human psychology and how it interacts with digital communication infrastructure.

The psychological mechanisms behind the spread of misinformation on social media in the IELTS Reading test

Questions 14-18

Choose the correct letter, A, B, C, or D.

14. According to the passage, cognitive fluency means:

- A. The ability to speak multiple languages

- B. How easily information can be understood

- C. The speed of reading comprehension

- D. Intelligence quotient measurement

15. What is the “illusory truth effect”?

- A. The tendency to believe true information

- B. The ability to detect false claims

- C. Believing something is true through repeated exposure

- D. The skill of identifying misinformation sources

16. The “backfire effect” refers to:

- A. Correcting misinformation successfully

- B. False beliefs becoming stronger when challenged with evidence

- C. Social media algorithms failing to work

- D. People changing their opinions after seeing facts

17. What role does social identity play in spreading misinformation?

- A. It has no connection to belief formation

- B. It prevents people from sharing false information

- C. Sharing misinformation becomes an act of group belonging

- D. It only affects young social media users

18. “Pre-bunking” is described as:

- A. Correcting false information after people believe it

- B. Warning people about manipulation tactics before they encounter false claims

- C. Deleting misinformation from social media

- D. Blocking users who share false content

Questions 19-23

Complete the summary below.

Choose NO MORE THAN TWO WORDS from the passage for each answer.

Misinformation spreads effectively due to several psychological factors. The availability heuristic causes people to judge events based on how easily they come to mind, making (19) __ content more memorable. Additionally, (20) __ occurs when people remember information but forget its source or context. Coordinated campaigns use (21) __ to move false narratives through increasingly credible channels. Misinformation also exploits (22) __, with anger and anxiety being particularly effective at driving sharing behavior. Intervention strategies like (23) __ expose people to weakened forms of manipulation techniques to build resistance.

Questions 24-26

Do the following statements agree with the claims of the writer in Reading Passage 2?

Write:

- YES if the statement agrees with the claims of the writer

- NO if the statement contradicts the claims of the writer

- NOT GIVEN if it is impossible to say what the writer thinks about this

24. Simple explanations are always more accurate than complex ones.

25. Fact-checking alone may not be sufficient to address strongly held false beliefs.

26. Younger generations are more susceptible to misinformation than older adults.

PASSAGE 3 – The Sociotechnical Architecture of Misinformation Ecosystems

Difficulty: Hard (Band 7.0-9.0)

Suggested time: 23-25 minutes

The proliferation of misinformation through digital social networks constitutes a paradigmatic example of how emergent properties of complex sociotechnical systems can generate outcomes that transcend the intentions of individual actors or the explicit design of technological infrastructure. Understanding this phenomenon requires moving beyond reductionist frameworks that attribute causality exclusively to either malicious human actors or algorithmic functions, instead adopting a systemic perspective that examines how technological affordances, economic incentives, regulatory environments, and sociocultural contexts interact to create conditions conducive to information disorder.

The attention economy that undergirds contemporary social media platforms creates structural imperatives that inadvertently privilege engaging content over accurate information. Platform business models predicated on advertising revenue necessitate maximizing user engagement metrics—time spent on platform, content interactions, and data harvesting opportunities. Algorithmic curation systems optimized toward these engagement objectives demonstrably favor content that triggers strong emotional responses, facilitates parasocial relationships, and encourages compulsive usage patterns. While platforms publicly disavow responsibility for information quality, their technical architectures instantiate what could be termed “algorithmic governance”—systematic shaping of information environments through computationally mediated selection and ranking.

This technological infrastructure intersects with what scholars term the “participatory disinformation” phenomenon, wherein ordinary users—not coordinated campaigns or state actors—constitute the primary vectors of misinformation dissemination. Research tracking information cascades during significant events reveals that genuine grassroots spread, motivated by sincerely held beliefs rather than malicious intent, accounts for the majority of misinformation exposure. This observation complicates intervention strategies predicated on identifying and eliminating intentional disinformation sources, as it suggests the problem is more diffuse and decentralized than often assumed.

The epistemic challenges posed by social media misinformation extend beyond the spread of discrete false claims to encompass what information theorists describe as “strategic truth decay”—the deliberate cultivation of generalized skepticism toward authoritative information sources and institutional knowledge production. Rather than persuading audiences of specific false narratives, sophisticated influence operations often aim to create an environment of radical epistemic uncertainty, where individuals become unable to distinguish reliable from unreliable information sources. This “flooding” strategy proves particularly effective because cognitive resources required to evaluate individual claims become overwhelmed, leading to wholesale retreat from engagement with political or scientific information—a state researchers term “information avoidance.”

The cross-platform ecosystem dynamics further complicate mitigation efforts. Misinformation rarely remains confined to single platforms but instead circulates through heterogeneous media environments, mutating and adapting to different technological affordances and community norms. A narrative might originate on fringe imageboards, gain traction in ideologically aligned online forums, achieve algorithmic amplification on mainstream social media, and ultimately receive coverage in traditional media outlets reporting on viral content. This multi-platform pipeline creates opportunities for information laundering, whereby dubious content acquires a veneer of legitimacy through strategic platform migration.

Network topology also plays a crucial role in misinformation dynamics. Social media platforms exhibit scale-free network characteristics, with small numbers of highly connected nodes (influencers, popular accounts) wielding disproportionate influence over information diffusion patterns. These structural inequalities in attention distribution mean that strategic compromise or manipulation of key network nodes can achieve widespread impact. Simultaneously, the tendency toward homophilic clustering—users connecting primarily with ideologically similar others—creates segmented information ecosystems with limited cross-cutting exposure to diverse perspectives.

From a governance perspective, addressing social media misinformation presents profound institutional challenges. The transnational nature of digital platforms complicates regulatory jurisdiction, as companies can strategically locate operations in permissive legal environments while serving global user bases. The tension between content moderation and freedom of expression remains contentious, with legitimate concerns about censorship and platform power counterbalancing demands for greater accountability regarding information quality. Different democratic societies have adopted divergent approaches, from the European Union’s co-regulatory frameworks emphasizing platform responsibility to the United States’ intermediary liability protections that largely exempt platforms from legal accountability for user-generated content.

Technocratic solutions emphasizing automated detection and removal of misinformation face significant limitations. Machine learning classifiers trained to identify false content struggle with contextual nuance, satirical content, and rapidly evolving manipulation techniques. False positive rates remain problematic, with disproportionate impacts on marginalized communities whose expressions may be flagged incorrectly due to training data biases. Moreover, over-reliance on technological fixes may divert attention from deeper structural issues regarding media literacy, educational systems, and civic infrastructure that shape populations’ information consumption practices.

Recent scholarship emphasizes multi-stakeholder approaches that distribute responsibility across technology companies, governmental bodies, civil society organizations, educational institutions, and individual users. Platform transparency initiatives, requiring disclosure of algorithmic functioning and content moderation practices, aim to create accountability mechanisms without necessarily imposing specific content restrictions. Public interest algorithms—alternative curation systems optimized for information quality rather than engagement—represent another avenue for exploration, though questions remain regarding who defines “public interest” and through what processes.

Educational interventions focused on lateral reading strategies—teaching users to evaluate source credibility by investigating what other sources say about them rather than remaining within original sites—show particular promise in improving digital information literacy. Such approaches acknowledge that comprehensive fact-checking of all encountered information remains cognitively impractical, instead focusing on developing efficient heuristics for credibility assessment. Additionally, prebunking campaigns that familiarize populations with common manipulation techniques may build cognitive resilience more effectively than reactive debunking of specific false claims.

Ultimately, the challenge of social media misinformation reflects deeper tensions within contemporary information ecosystems regarding epistemic authority, technological governance, and the social organization of knowledge production. Effective responses will require not merely technical solutions but fundamental reconsideration of how digital technologies mediate collective sense-making and what institutional arrangements can best support information integrity while preserving beneficial affordances of participatory digital culture. The stakes extend beyond correction of discrete false claims to encompass the foundational question of whether democratic societies can maintain shared epistemic foundations necessary for collective decision-making in increasingly fragmented information environments.

The sociotechnical architecture of misinformation ecosystems in the advanced IELTS Reading test

Questions 27-31

Choose the correct letter, A, B, C, or D.

27. According to the passage, what is a “reductionist framework”?

- A. A comprehensive approach to understanding complex systems

- B. An oversimplified view that attributes causality to single factors

- C. A method for detecting misinformation automatically

- D. A social media algorithm that ranks content

28. The “attention economy” refers to:

- A. A business model based on maximizing user engagement

- B. A type of psychological disorder

- C. Government regulation of social media

- D. Educational programs about focus

29. “Participatory disinformation” means:

- A. Only government actors spread false information

- B. Misinformation is always spread intentionally

- C. Ordinary users are the main spreaders of misinformation

- D. All social media content is false

30. What is “strategic truth decay”?

- A. Information naturally becoming outdated over time

- B. Deliberate creation of skepticism toward authoritative sources

- C. The physical deterioration of documents

- D. A scientific process of verification

31. According to the passage, what is a limitation of automated misinformation detection?

- A. It is 100% accurate in all cases

- B. It struggles with context, satire, and evolving techniques

- C. It only works on one social media platform

- D. It requires no human oversight

Questions 32-37

Complete the summary using the list of phrases, A-L, below.

The challenge of social media misinformation involves complex interactions between technology, economics, and society. Platform business models based on (32) __ create incentives to prioritize engaging content. Unlike coordinated campaigns, most misinformation spreads through (33) __ by ordinary users who genuinely believe the content. Sophisticated influence operations aim to create (34) __ rather than convince people of specific falsehoods. Misinformation moves through (35) __, adapting to different platform characteristics. The (36) __ of social networks means a few influential accounts have disproportionate impact. Effective solutions require (37) __ involving technology companies, governments, and educational institutions.

A. advertising revenue

B. government funding

C. grassroots spread

D. professional journalists

E. radical epistemic uncertainty

F. complete trust

G. multi-platform pipelines

H. single websites

I. scale-free topology

J. equal distribution

K. multi-stakeholder approaches

L. individual solutions

Questions 38-40

Answer the questions below.

Choose NO MORE THAN THREE WORDS from the passage for each answer.

38. What type of reading strategy involves evaluating sources by checking what other sources say about them?

39. What term describes campaigns that teach people about manipulation techniques before they encounter misinformation?

40. According to the passage, what do democratic societies need to maintain for effective collective decision-making?

3. Answer Keys

PASSAGE 1: Questions 1-13

1. FALSE

2. TRUE

3. FALSE

4. TRUE

5. NOT GIVEN

6. NOT GIVEN

7. algorithms / sophisticated algorithms

8. confirmation bias

9. coordinated campaigns / coordinate campaigns

10. transparency

11. B

12. B

13. C

PASSAGE 2: Questions 14-26

14. B

15. C

16. B

17. C

18. B

19. emotionally charged / emotional

20. source confusion

21. information laundering

22. emotional contagion

23. inoculation

24. NO

25. YES

26. NOT GIVEN

PASSAGE 3: Questions 27-40

27. B

28. A

29. C

30. B

31. B

32. A

33. C

34. E

35. G

36. I

37. K

38. lateral reading (strategies)

39. prebunking campaigns

40. shared epistemic foundations

4. Detailed Answer Explanations

Passage 1 – Explanations

Question 1: FALSE

- Question type: True/False/Not Given

- Keywords: Traditional media channels, faster, spreading information, social media

- Location in passage: Paragraph 1, lines 1-3

- Explanation: The passage says “What once required traditional media channels… can now be shared with millions of people within seconds”, which shows that social media is FASTER than traditional media, the opposite of the statement.

Question 2: TRUE

- Question type: True/False/Not Given

- Keywords: MIT study, false information, reaches audiences, rapidly, true information

- Location in passage: Paragraph 2, lines 2-4

- Explanation: “they reach 1,500 people six times faster than accurate information” matches the meaning of the statement exactly; “rapidly” is simply a paraphrase of “faster”.

Question 3: FALSE

- Question type: True/False/Not Given

- Keywords: Social media algorithms, designed to prevent, false information

- Location in passage: Paragraph 3, lines 1-2

- Explanation: “social media algorithms are programmed to prioritize engagement rather than accuracy”: the algorithms prioritize engagement and are NOT designed to prevent false information.

Question 4: TRUE

- Question type: True/False/Not Given

- Keywords: 59%, share articles, without reading

- Location in passage: Paragraph 5, lines 3-4

- Explanation: “approximately 59% of links shared on social media have never been clicked by the person sharing them” matches the statement in both the figure and the meaning.

Question 7: algorithms / sophisticated algorithms

- Question type: Sentence Completion

- Keywords: determine which content appears, users’ feeds

- Location in passage: Paragraph 3, lines 1-2 and Paragraph 4, lines 2-3

- Explanation: The passage refers to “social media algorithms” and “sophisticated algorithms” as what determines the content shown to users.

Question 11: B

- Question type: Multiple Choice

- Keywords: false news spread faster, accurate information

- Location in passage: Paragraph 3, lines 3-5

- Explanation: “Content that generates strong emotional responses… is more likely to appear”: content that triggers strong emotional reactions is prioritized, which makes false news spread faster.

Question 13: C

- Question type: Multiple Choice

- Keywords: consequence, health misinformation

- Location in passage: Paragraph 7, lines 1-2

- Explanation: “False health information has led people to reject life-saving vaccines” corresponds to option C, which paraphrases it as “rejecting vaccines that could save their lives”.

Passage 2 – Explanations

Question 14: B

- Question type: Multiple Choice

- Keywords: cognitive fluency means

- Location in passage: Paragraph 2, lines 1-2

- Explanation: “the ease with which information can be processed” is the definition given in the passage, which matches “how easily information can be understood”.

Question 16: B

- Question type: Multiple Choice

- Keywords: backfire effect refers to

- Location in passage: Paragraph 4, last line

- Explanation: “exposure to contradictory evidence may paradoxically strengthen rather than weaken false beliefs”: when people are shown evidence to the contrary, their false beliefs become stronger.

Question 18: B

- Question type: Multiple Choice

- Keywords: Pre-bunking described as

- Location in passage: Paragraph 10, lines 4-5

- Explanation: “Pre-bunking rather than debunking—warning people about manipulation tactics before they encounter specific false claims”: people are warned BEFORE they encounter false information.

Question 19: emotionally charged / emotional

- Question type: Summary Completion

- Keywords: availability heuristic, memorable content

- Location in passage: Paragraph 6, lines 3-4

- Explanation: “Dramatic, emotionally charged misinformation tends to be more memorable”: the words to fill in are “emotionally charged”.

Question 22: emotional contagion

- Question type: Summary Completion

- Keywords: anger and anxiety, driving sharing behavior

- Location in passage: Paragraph 9, lines 1-2

- Explanation: “Research into emotional contagion reveals how misinformation leveraging strong emotions spreads more effectively”.

Question 25: YES

- Question type: Yes/No/Not Given

- Keywords: Fact-checking alone, not sufficient, strongly held false beliefs

- Location in passage: Paragraph 10, lines 1-3

- Explanation: “Traditional fact-checking, while valuable, may prove insufficient when addressing deeply entrenched beliefs”: the writer's view is that fact-checking alone is not enough.

Passage 3 – Explanations

Question 27: B

- Question type: Multiple Choice

- Keywords: reductionist framework

- Location in passage: Paragraph 1, lines 3-4

- Explanation: “moving beyond reductionist frameworks that attribute causality exclusively to either malicious human actors or algorithmic functions”: such a framework reduces a complex problem to a single cause.

Question 29: C

- Question type: Multiple Choice

- Keywords: Participatory disinformation means

- Location in passage: Paragraph 3, lines 1-3

- Explanation: “ordinary users—not coordinated campaigns or state actors—constitute the primary vectors of misinformation dissemination”: ordinary users are the main source of the spread of misinformation.

Question 30: B

- Question type: Multiple Choice

- Keywords: strategic truth decay

- Location in passage: Paragraph 4, lines 2-4

- Explanation: “the deliberate cultivation of generalized skepticism toward authoritative information sources”: deliberately fostering doubt toward trustworthy sources.

Question 31: B

- Question type: Multiple Choice

- Keywords: limitation, automated misinformation detection

- Location in passage: Paragraph 8, lines 2-4

- Explanation: “Machine learning classifiers… struggle with contextual nuance, satirical content, and rapidly evolving manipulation techniques”: the limitations are listed explicitly.

Questions 32-37: (Summary Completion with word list)

- Location and explanation:

- 32 (A): “advertising revenue” – Paragraph 2, business models based on advertising

- 33 (C): “grassroots spread” – Paragraph 3, spontaneous spread by ordinary users

- 34 (E): “radical epistemic uncertainty” – Paragraph 4, creating radical doubt

- 35 (G): “multi-platform pipelines” – Paragraph 5, movement across multiple platforms

- 36 (I): “scale-free topology” – Paragraph 6, uneven network structure

- 37 (K): “multi-stakeholder approaches” – Paragraph 9, solutions involving multiple stakeholders

Question 38: lateral reading (strategies)

- Question type: Short Answer

- Keywords: reading strategy, checking what other sources say

- Location in passage: Paragraph 10, lines 1-3

- Explanation: “lateral reading strategies—teaching users to evaluate source credibility by investigating what other sources say about them”.

Question 40: shared epistemic foundations

- Question type: Short Answer

- Keywords: democratic societies need, collective decision-making

- Location in passage: Paragraph 11, last line

- Explanation: “whether democratic societies can maintain shared epistemic foundations necessary for collective decision-making”.

5. Key Vocabulary by Passage

Passage 1 – Essential Vocabulary

| Term | Part of speech | Pronunciation | Vietnamese meaning | Example from passage | Collocation |
|---|---|---|---|---|---|
| fundamentally transformed | adv + v | /ˌfʌndəˈmentəli trænsˈfɔːmd/ | thay đổi căn bản | “have fundamentally transformed the way information circulates” | fundamentally change/alter/reshape |
| democratization | n | /dɪˌmɒkrətaɪˈzeɪʃən/ | dân chủ hóa | “democratization of information” | democratization of knowledge/media/access |
| authenticity | n | /ˌɔːθenˈtɪsəti/ | tính xác thực | “regarding the authenticity and reliability of content” | verify/question/confirm authenticity |
| misinformation | n | /ˌmɪsɪnfəˈmeɪʃən/ | thông tin sai lệch | “Misinformation… spreads remarkably quickly” | spread/combat/debunk misinformation |
| alarming trend | adj + n | /əˈlɑːmɪŋ trend/ | xu hướng đáng báo động | “This alarming trend can be attributed to” | disturbing/worrying/concerning trend |
| echo chamber | n | /ˈekəʊ ˌtʃeɪmbə/ | buồng vang (môi trường thông tin kín) | “the echo chamber effect amplifies misinformation” | create/break/escape echo chambers |
| confirmation bias | n | /ˌkɒnfəˈmeɪʃən ˈbaɪəs/ | thiên kiến xác nhận | “This confirmation bias makes people more susceptible” | suffer from/exhibit/overcome confirmation bias |
| coordinate campaigns | v + n | /kəʊˈɔːdɪneɪt kæmˈpeɪnz/ | phối hợp các chiến dịch | “coordinate campaigns to spread false narratives” | launch/organize/run coordinated campaigns |
| undermine | v | /ˌʌndəˈmaɪn/ | làm suy yếu | “undermine democratic processes” | undermine efforts/confidence/authority |
| infodemic | n | /ɪnfəʊˈdemɪk/ | đại dịch thông tin | “identified an infodemic” | tackle/manage/face an infodemic |
| multi-faceted | adj | /ˌmʌltiˈfæsɪtɪd/ | đa chiều | “requires multi-faceted approaches” | multi-faceted problem/solution/approach |
| discerning | adj | /dɪˈsɜːnɪŋ/ | sáng suốt, phân biệt được | “create a more discerning audience” | discerning reader/consumer/viewer |

Passage 2 – Essential Vocabulary

| Term | Part of speech | Pronunciation | Vietnamese meaning | Example from passage | Collocation |
|---|---|---|---|---|---|
| interplay | n | /ˈɪntəpleɪ/ | sự tương tác | “complex interplay between technological infrastructure and human cognitive biases” | interplay between/of factors |
| cognitive fluency | adj + n | /ˈkɒɡnətɪv ˈfluːənsi/ | sự trôi chảy nhận thức | “Cognitive fluency plays a pivotal role” | achieve/improve cognitive fluency |
| pivotal role | adj + n | /ˈpɪvətəl rəʊl/ | vai trò then chốt | “plays a pivotal role in determining” | play a pivotal/crucial/key role |
| oversimplified narratives | adj + n | /ˌəʊvəˈsɪmplɪfaɪd ˈnærətɪvz/ | những câu chuyện đơn giản hóa quá mức | “oversimplified narratives… prove more persuasive” | present/create oversimplified narratives |
| illusory truth effect | adj + n + n | /ɪˈluːsəri truːθ ɪˈfekt/ | hiệu ứng chân lý ảo giác | “the illusory truth effect, whereby repeated exposure” | demonstrate/exhibit illusory truth effect |
| algorithmic amplification | adj + n | /ˌælɡəˈrɪðmɪk ˌæmplɪfɪˈkeɪʃən/ | sự khuếch đại bằng thuật toán | “Social media’s algorithmic amplification” | result in/cause algorithmic amplification |
| motivated reasoning | adj + n | /ˈməʊtɪveɪtɪd ˈriːzənɪŋ/ | suy luận có động cơ | “Motivated reasoning represents another significant barrier” | engage in/exhibit motivated reasoning |
| backfire effect | n + n | /ˈbækfaɪər ɪˈfekt/ | hiệu ứng phản tác dụng | “a phenomenon researchers term the backfire effect” | avoid/observe/trigger backfire effect |
| social affiliation | adj + n | /ˈsəʊʃəl əˌfɪliˈeɪʃən/ | sự liên kết xã hội | “becomes an act of social affiliation” | seek/demonstrate social affiliation |
| availability heuristic | n + n | /əˌveɪləˈbɪləti hjʊəˈrɪstɪk/ | phương pháp suy đoán dựa vào tính sẵn có | “The availability heuristic further complicates” | rely on/use availability heuristic |
| source confusion | n + n | /sɔːs kənˈfjuːʒən/ | nhầm lẫn nguồn tin | “Source confusion and misattribution of memory” | lead to/create source confusion |
| information laundering | n + n | /ˌɪnfəˈmeɪʃən ˈlɔːndərɪŋ/ | “tẩy trắng” thông tin | “The phenomenon of information laundering exploits” | engage in/practice information laundering |
| emotional contagion | adj + n | /ɪˈməʊʃənəl kənˈteɪdʒən/ | sự lây lan cảm xúc | “Research into emotional contagion reveals” | spread through/exhibit emotional contagion |
| inoculation | n | /ɪˌnɒkjuˈleɪʃən/ | sự tiêm phòng (tâm lý) | “inoculation strategies that expose people” | provide/develop psychological inoculation |
| pre-bunking | n | /priː ˈbʌŋkɪŋ/ | phản bác trước | “Pre-bunking rather than debunking” | use/implement pre-bunking strategies |

Passage 3 – Essential Vocabulary

| Term | Part of speech | Pronunciation | Vietnamese meaning | Example from passage | Collocation |
|---|---|---|---|---|---|
| proliferation | n | /prəˌlɪfəˈreɪʃən/ | sự gia tăng nhanh chóng | “The proliferation of misinformation” | rapid/massive proliferation |
| paradigmatic example | adj + n | /ˌpærədɪɡˈmætɪk ɪɡˈzɑːmpəl/ | ví dụ điển hình | “constitutes a paradigmatic example” | serve as/represent paradigmatic example |
| emergent properties | adj + n | /ɪˈmɜːdʒənt ˈprɒpətiz/ | thuộc tính nổi lên | “emergent properties of complex systems” | exhibit/display emergent properties |
| reductionist frameworks | adj + n | /rɪˈdʌkʃənɪst ˈfreɪmwɜːks/ | khuôn khổ giản lược | “moving beyond reductionist frameworks” | adopt/reject reductionist frameworks |
| technological affordances | adj + n | /ˌteknəˈlɒdʒɪkəl əˈfɔːdənsɪz/ | khả năng công nghệ cung cấp | “technological affordances… interact to create” | leverage/exploit technological affordances |
| attention economy | n + n | /əˈtenʃən iˈkɒnəmi/ | nền kinh tế chú ý | “The attention economy that undergirds” | operate in/shape attention economy |
| structural imperatives | adj + n | /ˈstrʌktʃərəl ɪmˈperətɪvz/ | những yêu cầu cấu trúc | “creates structural imperatives” | face/respond to structural imperatives |
| algorithmic governance | adj + n | /ˌælɡəˈrɪðmɪk ˈɡʌvənəns/ | quản trị bằng thuật toán | “what could be termed algorithmic governance” | implement/study algorithmic governance |
| participatory disinformation | adj + n | /pɑːˌtɪsɪˈpeɪtəri ˌdɪsɪnfəˈmeɪʃən/ | thông tin sai lệch có sự tham gia | “the participatory disinformation phenomenon” | combat/analyze participatory disinformation |
| epistemic challenges | adj + n | /ˌepɪˈstemɪk ˈtʃælɪndʒɪz/ | thách thức tri thức | “The epistemic challenges posed by” | address/face epistemic challenges |
| strategic truth decay | adj + n + n | /strəˈtiːdʒɪk truːθ dɪˈkeɪ/ | sự suy giảm chân lý có chiến lược | “strategic truth decay—the deliberate cultivation” | observe/study strategic truth decay |
| radical epistemic uncertainty | adj + adj + n | /ˈrædɪkəl ˌepɪˈstemɪk ʌnˈsɜːtənti/ | sự không chắc chắn tri thức triệt để | “create an environment of radical epistemic uncertainty” | generate/produce radical uncertainty |
| cross-platform ecosystem | adj + n | /krɒs ˈplætfɔːm ˈiːkəʊˌsɪstəm/ | hệ sinh thái xuyên nền tảng | “The cross-platform ecosystem dynamics” | navigate/analyze cross-platform ecosystem |
| scale-free network | adj + n | /skeɪl friː ˈnetwɜːk/ | mạng lưới phi tỷ lệ | “exhibit scale-free network characteristics” | form/create scale-free networks |
| homophilic clustering | adj + n | /ˌhɒməˈfɪlɪk ˈklʌstərɪŋ/ | sự tập hợp đồng nhất | “the tendency toward homophilic clustering” | observe/measure homophilic clustering |
| transnational nature | adj + n | /ˌtrænzˈnæʃənəl ˈneɪtʃə/ | bản chất xuyên quốc gia | “The transnational nature of digital platforms” | recognize/address transnational nature |
| lateral reading strategies | adj + n + n | /ˈlætərəl ˈriːdɪŋ ˈstrætədʒiz/ | chiến lược đọc ngang | “lateral reading strategies—teaching users” | teach/employ lateral reading strategies |
| cognitive resilience | adj + n | /ˈkɒɡnətɪv rɪˈzɪliəns/ | khả năng phục hồi nhận thức | “may build cognitive resilience” | develop/strengthen cognitive resilience |

Conclusion

The topic “Social media’s role in misinformation campaigns” is not only a pressing issue in contemporary society but also a theme that appears frequently in recent IELTS Reading tests. This sample test has given you a complete practice experience with 3 passages of increasing difficulty, from Easy (band 5.0-6.5) through Medium (band 6.0-7.5) to Hard (band 7.0-9.0).

Across 40 questions covering the most common question types (Multiple Choice, True/False/Not Given, Yes/No/Not Given, Matching Headings, Summary Completion, Matching Features and Short-answer Questions), you have had the chance to practise academic reading skills comprehensively. The detailed answer key, with explanations of where the information appears, the paraphrasing involved and why each answer is right or wrong, will help you assess your current level and identify the areas you need to improve.

In particular, the academic vocabulary compiled for each passage in detailed lookup tables is a valuable resource for expanding your vocabulary and improving your comprehension of complex texts. Practise regularly with sample tests like this one, pay attention to time management, and develop effective reading techniques such as skimming, scanning and lateral reading to reach your target band in the IELTS Reading test.
